What I think of the AI hype

Over the last year there have been many articles about the upcoming singularity and what will happen after it. Elon Musk and Stephen Hawking have warned of impending doom, while others are more optimistic and hope that cures for all diseases will be found, global warming will no longer be an issue and world peace will finally take hold.

However, I think that most of these predictions make some very strong assumptions, some of which might not be true.

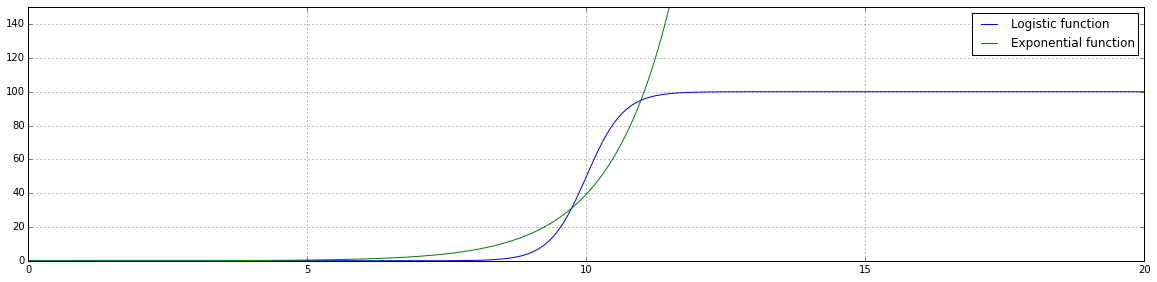

One of the main arguments is that technological progress is exponential, so we will get better stuff much faster than before. But there is a problem: only the exponential function itself keeps growing exponentially forever. In real life, you know, the thing you see when you look out the window, there are some annoying, pesky little hard constraints that you cannot get past. Small things like the speed of light or the size of an atom. Moore's law is already showing signs of slowing down. Even if somebody replaces silicon with carbon nanotubes, that will give us maybe ten more years by enabling transistors smaller than 10 nm. But atoms themselves are only about 0.03 to 0.3 nm in radius, so we can only shrink so far. So instead of exponential growth, we are more likely to see logistic growth.

As you can see, in the beginning the two functions are very similar; in the first half of the graph they are pretty much impossible to tell apart. Both start off slowly, then grow really quickly. But then they diverge: the logistic function levels off, while the exponential one blows up. Unfortunately, in the physical world, the logistic one is by far the more common.
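A few lines of Python make the "similar at first, then diverging" behaviour concrete. This is a sketch with assumed parameters, not data: both curves start at 1 and grow at the same rate \( r = 1 \), using \( e^{rt} \) for the exponential and \( K / (1 + (K-1)e^{-rt}) \) for the logistic, with a hypothetical carrying capacity \( K = 1000 \) standing in for a hard physical limit.

```python
import math

def exponential(t, r=1.0):
    """Unbounded growth starting at 1: e^(r t)."""
    return math.exp(r * t)

def logistic(t, r=1.0, K=1000.0):
    """Logistic growth starting at 1 with the same rate r,
    but capped at a (hypothetical) carrying capacity K."""
    return K / (1.0 + (K - 1.0) * math.exp(-r * t))

# Compare the two curves at a few points in time.
for t in range(0, 13, 2):
    e, l = exponential(t), logistic(t)
    print(f"t={t:2d}  exponential={e:10.1f}  logistic={l:7.1f}  ratio={e / l:6.2f}")
```

The ratio stays close to 1 for small t and then grows without bound, because the logistic curve can never exceed K while the exponential keeps going.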

Another problem I see is that people think having an AI will magically solve all problems, cure all diseases and so on. Unfortunately, those problems are pretty hard. And I don't mean that we don't know how to solve them, because we do; we just don't have the resources to do it. Things like protein folding, proving certain mathematical statements or finding the shortest route that visits every city in a country belong to a class of problems known as NP-hard. As far as anyone knows, solving them takes exponential time: if you want to solve such a problem for an input of size n, it will take something like \( 10^n \) steps to find a solution. For \( n=100 \), that is for all intents and purposes infinite.

The current scientific estimate for the age of the universe is around \( 10^{17} \) seconds, and the estimated number of atoms in the universe is about \( 10^{80} \). These are ridiculously large numbers. Now let's suppose that every atom in the universe worked for the entire age of the universe at 10 GHz, that is \( 10^{10} \) operations per second. Together they would perform a grand total of \( 10^{17} \cdot 10^{80} \cdot 10^{10} = 10^{107} \) steps. In roughly 14 billion years. So the entire universe, computing since the Big Bang, could solve our problem for an input of size 100, but an input of size 110 would already be out of reach. Nobody has proven yet that there is no "efficient" (polynomial-time) algorithm for NP-hard problems, but most computer scientists believe there isn't one. So, even if we get an AI, we won't get a magical fix for global warming.
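To make "we know how to solve them, we just lack the resources" concrete, here is a minimal Python sketch of a brute-force solver for the shortest round trip through all cities (the travelling salesman problem), plus the step-budget arithmetic from the paragraph above. The names `shortest_tour` and `largest_feasible_n` and the four-city distance matrix are hypothetical, for illustration only. Brute force checks \( (n-1)! \) orderings, which eventually grows even faster than \( 10^n \); the best known exact algorithms do much better than this, but are still exponential.

```python
import itertools
import math

def shortest_tour(dist):
    """Exact shortest round trip by brute force: try every ordering of the cities.
    `dist` is a square matrix of pairwise distances; city 0 is the fixed start."""
    n = len(dist)
    best_len, best_tour = math.inf, None
    # With the start fixed there are (n - 1)! orderings, so the work explodes with n.
    for perm in itertools.permutations(range(1, n)):
        tour = (0,) + perm
        # Sum every leg, including the final leg back to the start.
        length = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
        if length < best_len:
            best_len, best_tour = length, tour
    return best_len, best_tour

# Step budget from the text: 10^17 seconds * 10^80 atoms * 10^10 operations per second.
BUDGET = 10**17 * 10**80 * 10**10

def largest_feasible_n(cost, budget=BUDGET):
    """Largest input size n whose step count cost(n) still fits in the budget.
    Assumes cost is increasing and cost(1) fits."""
    n = 1
    while cost(n + 1) <= budget:
        n += 1
    return n

# Four cities on a loop: neighbours are 1 apart, opposite corners 2 apart.
square = [[0, 1, 2, 1],
          [1, 0, 1, 2],
          [2, 1, 0, 1],
          [1, 2, 1, 0]]
print(shortest_tour(square))                                # (4, (0, 1, 2, 3))
print(largest_feasible_n(lambda n: 10**n))                  # 107
print(largest_feasible_n(lambda n: math.factorial(n - 1)))  # 74
```

With the whole universe's budget of \( 10^{107} \) steps, a \( 10^n \)-step algorithm tops out at an input of size 107, and this brute-force tour search at 74 cities.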

Another thing I believe is that we are simply barking up the wrong tree. Even though there have recently been awesome advances in computer vision and natural language processing, these methods still boil down to something like the following formula:

$$ \min_{w, c} \frac{1}{2} w^T w + C \sum_{i=1}^{n} \log\left(\exp\left(-y_i (X_i^T w + c)\right) + 1\right) $$
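To show how little machinery this objective involves, here is a minimal pure-Python sketch that evaluates it for a given \( w \) and \( c \). The names `softplus_neg` and `logistic_loss` are hypothetical; the labels \( y_i \) are assumed to be \( \pm 1 \), \( X_i \) is the i-th row of features, and \( C \) is the inverse regularization strength (this appears to be the form scikit-learn's user guide gives for L2-penalized logistic regression).

```python
import math

def softplus_neg(margin):
    """log(exp(-margin) + 1), computed without overflow for large |margin|."""
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def logistic_loss(w, c, X, y, C=1.0):
    """L2-regularized logistic regression objective:
    (1/2) w^T w + C * sum_i log(exp(-y_i (X_i^T w + c)) + 1).
    w: weights, c: intercept, X: list of feature rows, y: labels in {-1, +1}."""
    reg = 0.5 * sum(wj * wj for wj in w)  # (1/2) w^T w
    data = 0.0
    for xi, yi in zip(X, y):
        # y_i (X_i^T w + c): positive when the point is on the correct side.
        margin = yi * (sum(xij * wj for xij, wj in zip(xi, w)) + c)
        data += softplus_neg(margin)
    return reg + C * data

# Two 1-D points with opposite labels, weight 1, no intercept.
print(logistic_loss([1.0], 0.0, [[1.0], [-1.0]], [1, -1]))
```

Training means searching for the \( w \) and \( c \) that minimize this number; that search is not shown here.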

The "only" difference between this logistic regression model, which was introduced in the 1940s, and the latest and greatest neural networks is that the latter apply this formula repeatedly, up to 22 times, on nested levels of X and y. Sure, the interpretation is different, but neural networks are still glorified stacked matrix multiplications. I'm sorry, but I just don't believe an AI will somehow emerge from this. To get to something that resembles intelligence, we will need completely new approaches, so we're still quite far from it.
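The "stacking" can be sketched in a few lines of pure Python, assuming sigmoid activations so that each unit is exactly a logistic regression model: a matrix product, plus a bias, followed by the elementwise logistic function. The names `sigmoid`, `layer` and `forward` and the hand-picked weights in `net` are hypothetical; real networks typically learn their weights by gradient descent and often use other nonlinearities such as ReLU, neither of which is shown here.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^-z), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def layer(W, b, x):
    """One layer: matrix-vector product W x, plus bias b, then sigmoid on each entry.
    Each row of W together with this sigmoid is a logistic regression unit."""
    return [sigmoid(sum(wij * xj for wij, xj in zip(row, x)) + bi)
            for row, bi in zip(W, b)]

def forward(layers, x):
    """A feed-forward network: feed each layer's output into the next one."""
    for W, b in layers:
        x = layer(W, b, x)
    return x

# Hypothetical 2-2-1 network with hand-picked (untrained) weights.
net = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5]),  # hidden layer: 2 inputs -> 2 units
    ([[2.0, -1.0]], [0.1]),                    # output layer: 2 units -> 1 output
]
print(forward(net, [1.0, 2.0]))
```

Making the network "deeper" just means appending more `(W, b)` pairs to the list.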

The one thing I do agree with is that computers can be dangerous. Just look at the 2010 Flash Crash of the stock market. Somebody suddenly sold a lot of stock, all the algorithmic trading computers jumped in, ???, 1 trillion dollars vanished, ???, and 40 minutes later it was back. And we have only vague ideas about what happened. And that's without going into the long list of other computer errors from the past, some of which cost many human lives. I think these kinds of problems are much bigger than the ones an AI is going to cause, and we are going to see them more and more, simply because of the law of large numbers. If an error happens only once in every billion runs of a certain function, then between 1970 and 2000 that function was probably never called a billion times. But now that many web services have hundreds of millions of users and get called billions of times a day, it's only a matter of time before something fails really badly.
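The "only a matter of time" argument is easy to quantify. A minimal sketch, assuming failures are independent across calls (an idealization) and using the paragraph's illustrative rate of one error per billion calls; the function name `prob_at_least_one_failure` is hypothetical.

```python
import math

def prob_at_least_one_failure(p, calls):
    """Chance that an error with per-call probability p occurs at least once in
    `calls` independent calls: 1 - (1 - p)^calls, via log1p/expm1 for accuracy."""
    return -math.expm1(calls * math.log1p(-p))

p = 1e-9  # "once every 1 billion times"
for label, calls in [("a million calls", 10**6),
                     ("a billion calls (one busy day)", 10**9),
                     ("a year of busy days", 365 * 10**9)]:
    print(f"{label}: {prob_at_least_one_failure(p, calls):.4f}")
```

This prints roughly 0.0010, 0.6321 and 1.0000: a bug that is practically invisible at a million calls shows up on most days at a billion calls a day, and is all but certain within a year.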